Artificial intelligence and large language models have become part of our daily lives, simplifying previously time-intensive tasks (like sifting through reviews on Amazon) and working behind the scenes without us even knowing it. Yet higher ed advancement teams still approach the technology with skepticism and caution. And why wouldn’t they? The risk of compromising security and leaving your donors’ personal information open to harm and misuse is nothing to take lightly.

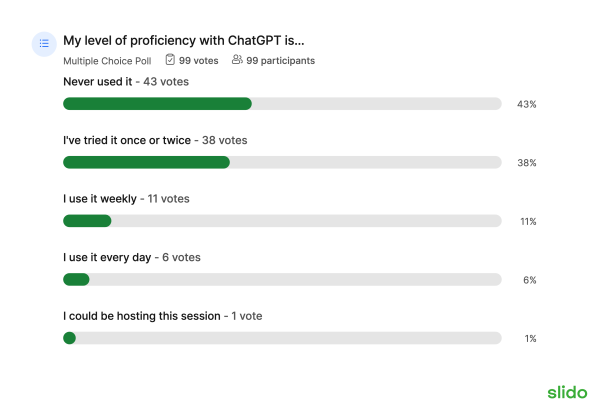

We recently held a training session for more than 100 advancement professionals at a national public university. When we surveyed their proficiency with ChatGPT, the results showed that a majority had either never used it or had tried it only once or twice.

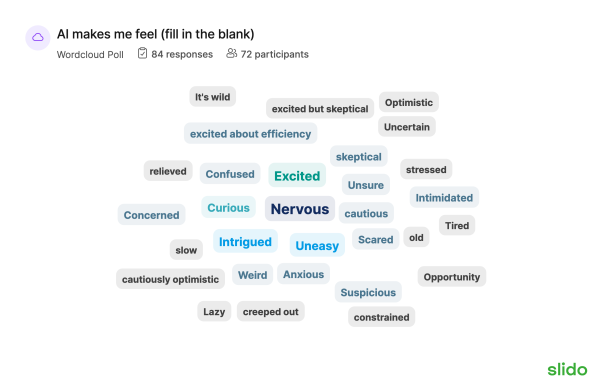

And when asked how they feel about AI more broadly, “Nervous” was the most common response.

It’s clear that fear and trepidation are hindering the advancement industry’s ability to embrace all that AI and machine learning have to offer. That caution is understandable: maintaining the privacy and security of your institution’s most precious relationships is of the utmost importance.

We’re here to tell you that leveraging Large Language Models (LLMs) and generative AI within your organization – with data privacy at the forefront – is possible.

Here’s a step-by-step guide to techniques and best practices that will help you make the most of this game-changing technology ethically and responsibly:

- Understand Data Privacy Requirements:

Start by tracking down your institution’s data privacy requirements, including any regulatory obligations such as GDPR, HIPAA, or industry-specific standards. This will help you understand the necessary constraints for handling data.

- Data Minimization and Anonymization:

To protect sensitive donor data, ensure that you minimize the amount of information provided to the LLMs and anonymize data points when necessary. Remove or obfuscate personally identifiable information (PII) or other sensitive details before feeding data through the AI model.

Examples of personally identifiable information (PII) include Social Security number (SSN), passport number, driver’s license number, taxpayer identification number, patient identification number, and financial account or credit card number, as well as full name, personal address, phone number, and detailed giving information.
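As a rough illustration of minimization and redaction, here is a minimal Python sketch. The patterns, field names, and the `redact` and `minimize` helpers are hypothetical and cover only a few US-style formats. Real PII detection (names and addresses in particular) needs a much broader approach than regular expressions.

```python
import re

# Hypothetical patterns for illustration only; they will miss many real formats.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[ .-]\d{3}[ .-]\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched PII pattern with a bracketed placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def minimize(record: dict, allowed_fields: set) -> dict:
    """Keep only the fields the prompt actually needs (data minimization)."""
    return {key: value for key, value in record.items() if key in allowed_fields}


note = "Reach Pat at pat@example.edu or 617-555-0100; SSN 123-45-6789."
print(redact(note))
```

Note that the first name in the sample note is left untouched by the patterns, which is why dropping unneeded fields with something like `minimize` before building a prompt matters as much as pattern-based redaction.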

- On-Premises or Private Cloud Deployment:

Consider deploying LLMs and generative AI models on your own servers or in a private cloud environment. This allows you to have more control over data access and reduces the risk of exposing sensitive information to external parties.

- Federated Learning:

Implement federated learning techniques, which enable model training on decentralized data sources without centralizing sensitive data. In this approach, the model is sent to the data sources, trained locally, and only model updates are shared, not raw data.
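To make the idea concrete, here is a toy sketch of federated averaging in Python. It assumes a one-parameter linear model and two made-up data sites, and the function names are hypothetical. Production systems typically rely on a dedicated framework and add protections such as secure aggregation.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Train on one site's (x, y) pairs; only the updated weight leaves the site."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global, sites):
    """Average the site updates, weighted by how many records each site holds."""
    total = sum(len(data) for data in sites)
    return sum(local_update(w_global, data) * len(data) for data in sites) / total


# Hypothetical sites whose raw data never leaves them; both follow y = 2x.
sites = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0), (4.0, 8.0), (5.0, 10.0)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, sites)
print(round(w, 4))
```

The coordinating server only ever sees the weights returned by `local_update`, never the `(x, y)` records themselves.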

- Differential Privacy:

Apply differential privacy mechanisms to the data or the model itself. This adds noise or randomness to the data or model parameters to protect individual data while still allowing for meaningful insights.

Example: Suppose you have a dataset of people’s ages and you want to calculate the average age without revealing the ages of individual people. Applying differential privacy to this scenario might involve adding random noise to each person’s age before computing the average. Because the noise largely cancels out across many records, the average stays close to the true value, but even if someone tries to analyze the noisy data, they won’t be able to accurately determine the age of any individual in the dataset.
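The age example can be sketched in a few lines of Python. This is a minimal illustration, not a vetted implementation: it assumes ages are clipped to a range of 0 to `max_age`, uses Laplace noise (one common choice), and the function names and the privacy parameter `epsilon` are introduced here for illustration.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_mean_age(ages, epsilon, max_age=100, rng=None):
    """Add noise to every age individually, then average the noisy values."""
    rng = rng or random.Random()
    scale = max_age / epsilon  # a wider range or a smaller epsilon means more noise
    noisy = [min(max(age, 0), max_age) + laplace_noise(scale, rng) for age in ages]
    return sum(noisy) / len(noisy)


ages = [20 + (i % 50) for i in range(10_000)]  # made-up data, true mean 44.5
print(private_mean_age(ages, epsilon=1.0))
```

A smaller `epsilon` gives stronger privacy at the cost of a noisier average, so the setting is a trade-off to agree on with your data governance team.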

- Secure Data Transmission:

Ensure that data sent to and from the AI models is encrypted in transit using strong, current protocols such as TLS. Use Virtual Private Networks (VPNs) or other secure channels for additional protection.
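For teams that call an AI service from their own scripts, one way to enforce encryption in transit is sketched below using Python’s standard `ssl` module. The helper name `strict_tls_context` and the choice of TLS 1.2 as the floor are assumptions. Your security team may require a higher minimum.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Build a client context that verifies certificates and refuses old TLS versions."""
    ctx = ssl.create_default_context()  # certificate and hostname checks are on by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed floor; raise it if policy requires
    return ctx


ctx = strict_tls_context()
print(ctx.minimum_version)
```

The context can then be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`.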

- Access Control and Authentication:

Implement strict access control mechanisms and strong authentication to restrict who can interact with the AI models and access sensitive data. This can include role-based access control and multi-factor authentication.
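Here is a minimal sketch of how role-based access control and a multi-factor check might combine. The roles, actions, and the `is_allowed` helper are hypothetical. In practice these checks usually live in your identity provider or the AI platform itself rather than in custom code.

```python
from dataclasses import dataclass

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "gift_officer": {"view_summary"},
    "data_admin": {"view_summary", "export_records", "configure_model"},
}


@dataclass(frozen=True)
class User:
    name: str
    role: str
    mfa_verified: bool = False


def is_allowed(user: User, action: str) -> bool:
    """Deny by default: require MFA first, then check the role's permissions."""
    if not user.mfa_verified:
        return False
    return action in ROLE_PERMISSIONS.get(user.role, set())


officer = User("Sam", "gift_officer", mfa_verified=True)
print(is_allowed(officer, "view_summary"), is_allowed(officer, "export_records"))
```

Unknown roles and unverified users fall through to a denial, which keeps the default safe when someone’s role changes or a new action is added.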

- Auditing and Monitoring:

Continuously monitor access to the AI models and data. Implement audit trails and logging to detect any unauthorized or suspicious activities.
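An audit trail can start as simply as one structured log entry per request. The sketch below uses Python’s standard `logging` and `json` modules. The field names, the `ai_audit` logger name, and the threshold of three denials are assumptions for illustration.

```python
import json
import logging
from collections import Counter
from datetime import datetime, timezone

audit_log = logging.getLogger("ai_audit")  # hypothetical logger name


def audit_event(user: str, action: str, allowed: bool) -> str:
    """Record who attempted what, when, and whether it was permitted."""
    entry = json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "action": action,
        "allowed": allowed,
    })
    (audit_log.info if allowed else audit_log.warning)(entry)
    return entry


def flag_repeated_denials(entries, threshold=3):
    """Return users whose denied attempts reach the threshold."""
    denied = Counter()
    for raw in entries:
        event = json.loads(raw)
        if not event["allowed"]:
            denied[event["user"]] += 1
    return sorted(user for user, count in denied.items() if count >= threshold)


entries = [audit_event("u1", "export_records", False) for _ in range(3)]
entries.append(audit_event("u2", "view_summary", True))
print(flag_repeated_denials(entries))
```

Structured entries like these are easy to forward to whatever monitoring tool your central IT team already uses.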

- Secure Model Deployment:

Secure the deployment of the AI models by using secure containers or virtualization technologies. Regularly update and patch these environments to address security vulnerabilities.

- Regular Security Assessments:

Conduct regular security assessments, including penetration testing and code review, to identify and address potential security weaknesses in your AI infrastructure.

- Data Retention and Deletion Policies:

Establish data retention and deletion policies to ensure that data is only stored for as long as necessary. Implement secure data disposal procedures.
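A retention policy ultimately comes down to a cutoff date. Here is a minimal sketch, assuming each stored record carries a timezone-aware `stored_at` timestamp. The record layout and the `apply_retention` helper are hypothetical, and securely disposing of the expired records is left to your storage system.

```python
from datetime import datetime, timedelta, timezone


def apply_retention(records, retention_days, now=None):
    """Split records into those still within the retention window and those past it."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    kept = [r for r in records if r["stored_at"] >= cutoff]
    expired = [r for r in records if r["stored_at"] < cutoff]
    return kept, expired


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
records = [
    {"id": 1, "stored_at": datetime(2024, 1, 30, tzinfo=timezone.utc)},
    {"id": 2, "stored_at": datetime(2023, 1, 1, tzinfo=timezone.utc)},
]
kept, expired = apply_retention(records, retention_days=30, now=now)
print([r["id"] for r in kept], [r["id"] for r in expired])
```

Returning the expired records separately makes it possible to log what was deleted and confirm the disposal step actually ran.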

- Employee Training and Awareness:

Train your employees about the importance of data privacy and security. Educate them about best practices for handling sensitive data and AI models. This is something Advancement Services can do independently or in collaboration with a central IT team.

- Legal Agreements and Contracts:

When working with third-party AI providers, ensure that you have robust legal agreements and contracts in place that specify data handling and privacy requirements.

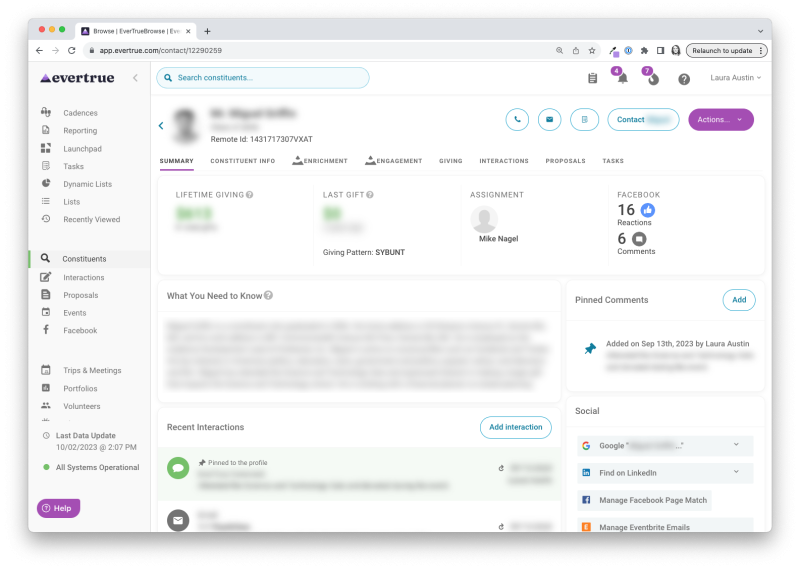

Note: EverTrue uses the OpenAI API to power features like mobile AI constituent summaries, available in the EverTrue mobile app. The terms of service include multiple protective clauses along the lines of those referenced above. For example, no data sent to or received from the API can be used for training or improving the model, so the data remains private. EverTrue’s contract with OpenAI includes more detailed clauses and privacy stipulations surrounding the API data transfer to protect our partners’ data. Chat with us to learn more about EverTrue’s AI-powered features.

- Red Team Testing:

Consider conducting “red team testing,” where ethical hackers simulate attacks on your AI systems to identify vulnerabilities and weaknesses.

- Regular Compliance Audits:

Periodically review and audit your AI systems to ensure they comply with data privacy regulations and your internal policies.

By following these steps, you can leverage the power of Large Language Models and generative AI while keeping data private and secure within your organization and minimizing the risk of exposing it to external parties. Remember that data privacy is an ongoing process, and it’s essential to stay vigilant and adapt to evolving threats and regulations.

More Resources:

APRA Connections: “Five Ways Prospect Research Can Evolve in the World of AI”

NTEN’s Nonprofits and Artificial Intelligence Readiness Checklist